EXPERIENTIAL DESIGN

Aurelia Regina Sutjahjokartiko (0329953)

Experiental Design

WEEK 1 - Tutorial (Set Up Vuforia)

Set Up Vuforia, Create Database key and Unity

1. Create an account on Vuforia Developer

2. Create License Manager → get development key→ give app name and confirm

3. Target Manager → Add Target → create target by putting the image → Download Database (All)

Inside Unity

1. Build Setting → player setting → Vuforia Augmented Reality

2. Right click on layer → Vuforia → AR Camera → Import

3. Resources [asset] → open vuforia configuration → Global (App Lisence Key) → Database (Checklist own Database and ACTIVE)

4. Right click on layer → Vuforia → Image → Predefined → copy paste own database

5. Under image → place something

6. Under image → Advanced → image tracking

7. Delete the main camera

WEEK 2 - Exercise (User Journey/Mapping)

The place to a case study: Taylor's Library

WEEK 5 - Consultation (Experiental Design Proposal)

Feedback - 24 September 2018

Need more idea for Augmented Reality, because the idea that proposed is too mainstream and not useful for AR function

WEEK 6 - Consultation (Experiental Design Proposal)

Feedback - 1 October 2018

My idea is good and useful, but there is no AR function inside there. Maybe it is good for mobile apps not AR. Need to rethink the direction to make it useful. Still in the same genre (which is politic), but maybe how to encourage new voters to get know the candidate.

WEEK 8 - Consultation & Tutorial (Experiental Design Proposal)

Tutorial - 16 October 2018

To insert video after scan the target

1. ImageTarget (layer) → rename quad with "vidplayer1" → insert video

2. go to the "Deflaut trackable event....." for edit the code

3.(inside script) add "using.UnityEngine.Video" → public VideoPlayer vid1

4. [inside void start()] vid1.stop () to make the video stop at first

5. [inside on trackable found] "if........" {vid1. play()}

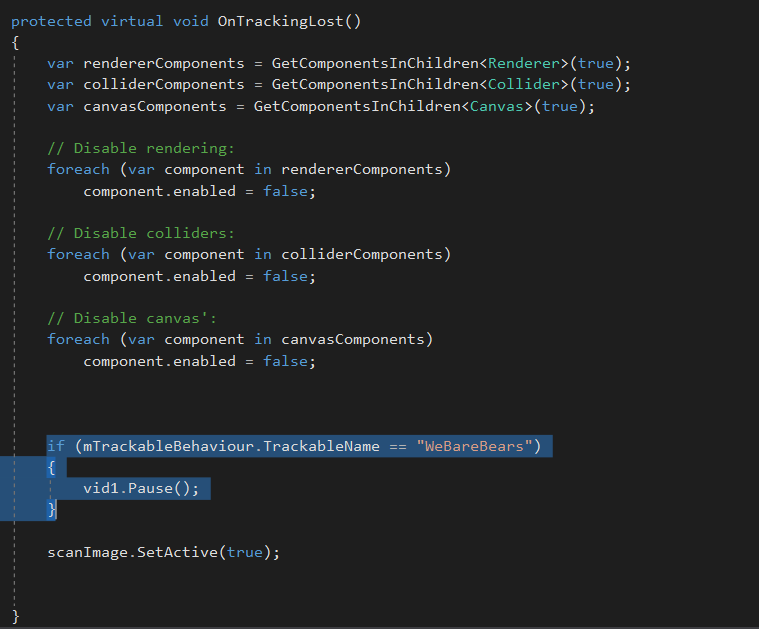

6. [inside on trackable lost] "if......."{vid1.pause()}

Feedback - 18 October 2018

Mr Razif said the direction of the new proposal is a bit clearer than the previous proposal. However, for the implementation of the augmented reality is should more beyond than just click and pop up.

WEEK 9 - Tutorial (Raycast)

To make the 3d Object click-able and pop up video

1. Inside the ImageTarget (on layer) put the "clickScript"

2. Copy paste the script from Mr Razif

3. Create public GameObject sword; public GameObject vid2;

4. [on void start()] vid2.SetActive(false);

5. Make few changes on ray ray camera → Ray ray = Camera.main.ScreenPointToRay(Input.mousePosition);

6. Set the 3d object (sword) into player tag → if (hit.collider.tag == "Player")

7. [Inside if (hit.collider.tag == "Player") ] → vid2.SetActive(true); and sword.SetActive(false);

8. Add box collider inside 3D object and move the placement of box colider. So, the 3D object can be clickable

Compilation of 4 Exercise in one AR

1ST Exercise = Scan target

2ND Exercise = Intro Page and Outro Page

3RD Exercise = Video after Scan

4RD Exercise = click 3d object, pop up video

Final Project (Borobudur 101)

Google Link Video : https://drive.google.com/open?id=1iBV-4Wmt7f9EQTibub9TLOr2cVnj5RIM

Experiential Design

WEEK 1 - Tutorial (Set Up Vuforia)

Set up Vuforia, create the license key and database, and configure Unity

1. Create an account on Vuforia Developer

2. License Manager → Get Development Key → enter an app name and confirm

3. Target Manager → Add Target → create the target by uploading the image → Download Database (All)

Inside Unity

1. Build Settings → Player Settings → enable Vuforia Augmented Reality

2. Right-click in the Hierarchy → Vuforia → AR Camera → Import

3. Resources (in Assets) → open Vuforia Configuration → Global (paste the App License Key) → Databases (tick your own database and Activate)

4. Right-click in the Hierarchy → Vuforia → Image → set the type to Predefined → select your own database

5. Under the image target → place an object as its child

6. Under the image target → Advanced → image tracking

7. Delete the Main Camera (the AR Camera replaces it)

WEEK 2 - Exercise (User Journey/Mapping)

Case study location: Taylor's Library

- Goals:
  - Borrow or read a book
  - Sleep or relax
  - Group work
  - Work or study individually
  - Print, scan, and photocopy

- The journey:
  - Enter the library
  - Tap your student ID at the entrance gate
  - Search Taylor’s catalogue (on your phone or a provided computer), go directly to the bookshelf, or ask the librarian
  - Take note of the number of the book you want
  - Go to the shelf that covers that number range
  - Browse the book titles in that row
  - Take the book (to read or borrow)
  - If you decide to borrow it, go to level 2 and check the book out at the self-checkout machine. Take the receipt and note the return date.
  - Leave the library, scanning your ID again
  - After 2 weeks:
    - (If you have finished reading) return the book at the 24-hour study room using the self-return service. Take the receipt (keep it or throw it away).
    - (If you would like to extend the loan) go to Taylor’s library website, log in, and extend the date

- Ideas to improve:
  - Tap student ID:
    - Biometric or face scan
  - Finding the book:
    - Label the shelves by book category, e.g. architecture, photography, culinary
    - Improve the numbering and labelling system and organize the books accordingly (levelno.shelfno.rowno.bookno)
    - iPad, map, or directory system
    - Or a keypad where you enter your book number and the shelf lights up to direct you. If several people are borrowing at the same time, a monitor shows your turn in the queue.
  - Checkout:
    - Self-checkout using a phone (possibly as one of the app's functions)
    - The steps and images in the tutorial for the machine are not clear
  - Returning the book:
    - Either extend the functions of Taylor’s mobile app or build a new library app (with reminders for when a book is due, book recommendations based on your checkout history, etc.)

WEEK 5 - Consultation (Experiential Design Proposal)

Feedback - 24 September 2018

I need more ideas for Augmented Reality, because the idea I proposed is too mainstream and does not make good use of AR.

WEEK 6 - Consultation (Experiential Design Proposal)

Feedback - 1 October 2018

My idea is good and useful, but it has no AR function. It may suit a mobile app better than AR. I need to rethink the direction so that AR is actually useful. I can stay in the same genre (politics), but perhaps focus on how to encourage new voters to get to know the candidates.

WEEK 8 - Consultation & Tutorial (Experiental Design Proposal)

Tutorial - 16 October 2018

To insert video after scan the target

1. ImageTarget (layer) → rename quad with "vidplayer1" → insert video

2. go to the "Deflaut trackable event....." for edit the code

3.(inside script) add "using.UnityEngine.Video" → public VideoPlayer vid1

4. [inside void start()] vid1.stop () to make the video stop at first

5. [inside on trackable found] "if........" {vid1. play()}

6. [inside on trackable lost] "if......."{vid1.pause()}
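The steps above can be sketched as a small separate component. This is only a minimal sketch, not the script from the tutorial: the class name VideoOnTarget and the two methods HandleTargetFound and HandleTargetLost are hypothetical names made up for illustration, and it assumes you call them yourself from the "found" and "lost" branches of the trackable event script. Only VideoPlayer and its Stop, Play and Pause methods come from Unity.

```csharp
using UnityEngine;
using UnityEngine.Video;

// Hypothetical helper component; the class and method names are
// illustrative and are not part of Vuforia or of the tutorial script.
public class VideoOnTarget : MonoBehaviour
{
    // Drag the VideoPlayer of the "vidplayer1" quad here in the Inspector.
    public VideoPlayer vid1;

    void Start()
    {
        // Keep the video stopped until the target has been scanned.
        vid1.Stop();
    }

    // Assumed to be called from the "trackable found" branch.
    public void HandleTargetFound()
    {
        vid1.Play();
    }

    // Assumed to be called from the "trackable lost" branch.
    public void HandleTargetLost()
    {
        // Pause (rather than Stop) so the video resumes from the same
        // position when the target is found again.
        vid1.Pause();
    }
}
```

Keeping the video logic in its own component means the trackable event script only needs two extra calls, which may make it easier to reuse for a second video.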

Feedback - 18 October 2018

Mr Razif said the direction of the new proposal is a bit clearer than the previous one. However, the augmented reality implementation should go beyond just click and pop-up.

WEEK 9 - Tutorial (Raycast)

To make the 3D object clickable and pop up a video

1. On the ImageTarget (in the Hierarchy), attach the "clickScript"

2. Copy and paste the script from Mr Razif

3. Declare public GameObject sword; public GameObject vid2;

4. [in void Start()] vid2.SetActive(false);

5. Change the ray line so it uses the camera → Ray ray = Camera.main.ScreenPointToRay(Input.mousePosition);

6. Give the 3D object (sword) the "Player" tag → if (hit.collider.tag == "Player")

7. [Inside if (hit.collider.tag == "Player")] → vid2.SetActive(true); and sword.SetActive(false);

8. Add a Box Collider to the 3D object and adjust the collider's position so that the 3D object can be clicked
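Put together, the steps above might look like the sketch below. It is not Mr Razif's original script, which is not reproduced in this post: the class name ClickScriptSketch is hypothetical, and the use of Input.GetMouseButtonDown(0) to detect the click and of Physics.Raycast to cast the ray are assumptions about how the click is handled. The fields sword and vid2, the "Player" tag and the ScreenPointToRay line come from the steps.

```csharp
using UnityEngine;

// Hypothetical sketch of the click script; the class name is illustrative.
// In Unity the file name must match the class name.
public class ClickScriptSketch : MonoBehaviour
{
    public GameObject sword; // the clickable 3D object, tagged "Player"
    public GameObject vid2;  // the object that shows the video

    void Start()
    {
        // Hide the video until the 3D object has been clicked.
        vid2.SetActive(false);
    }

    void Update()
    {
        // Assumption: react only on the frame where the left button goes down.
        if (!Input.GetMouseButtonDown(0))
        {
            return;
        }

        // Cast a ray from the camera through the clicked screen position.
        // Camera.main is the camera tagged "MainCamera".
        Ray ray = Camera.main.ScreenPointToRay(Input.mousePosition);
        RaycastHit hit;

        // The ray can only hit objects that have a collider,
        // which is why step 8 adds a Box Collider to the sword.
        if (Physics.Raycast(ray, out hit) && hit.collider.CompareTag("Player"))
        {
            vid2.SetActive(true);
            sword.SetActive(false);
        }
    }
}
```

CompareTag("Player") performs the same check as hit.collider.tag == "Player" from step 6; either form should work here.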

Compilation of the 4 exercises in one AR app

1ST Exercise = Scan target

2ND Exercise = Intro Page and Outro Page

3RD Exercise = Video after Scan

4TH Exercise = Click 3D object, pop up video

Final Project (Borobudur 101)

Video link (Google Drive): https://drive.google.com/open?id=1iBV-4Wmt7f9EQTibub9TLOr2cVnj5RIM
